Context

While trying to find out which client on my home network was eating up bandwidth, and while trying to capture / debug some attacks and network flows, I experimented with the UniFi gateway (UniFi Security Gateway 3P model) to export NetFlow data to a pmacct / nfacctd NetFlow collector.

Configuration

First, you need to define / configure the flow-accounting settings on the UniFi gateway.

CLI

For testing, you can temporarily configure the NetFlow exporter from the CLI, over SSH on the gateway. Be careful: the UniFi controller may overwrite this configuration at any time.

```
user@gw:~$ configure
[edit]
user@gw# show system flow-accounting
 ingress-capture pre-dnat
 interface eth0
 interface eth1
 netflow {
     sampling-rate 10
     server 192.168.1.100 {
         port 6999
     }
     timeout {
         expiry-interval 60
         flow-generic 60
         icmp 300
         max-active-life 604800
         tcp-fin 300
         tcp-generic 3600
         tcp-rst 120
         udp 300
     }
     version 9
 }
 syslog-facility daemon
[edit]
```
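For reference, each leaf of the tree above corresponds to one `set` command in configure mode (for example `set system flow-accounting netflow server 192.168.1.100 port 6999`). Here is a minimal sketch that derives those commands from a Python dict mirroring the tree; the dict layout is my own convention, not something the gateway reads. The printed lines can be pasted into the `configure` session, followed by `commit`.

```python
"""Turn a nested dict mirroring `show system flow-accounting` into `set` commands.

Sketch only: the dict is copied from the CLI output above, and lists stand
for multi-valued leaves such as `interface`.
"""

FLOW_ACCOUNTING = {
    "ingress-capture": "pre-dnat",
    "interface": ["eth0", "eth1"],
    "netflow": {
        "sampling-rate": "10",
        "server": {"192.168.1.100": {"port": "6999"}},
        "timeout": {
            "expiry-interval": "60",
            "flow-generic": "60",
            "icmp": "300",
            "max-active-life": "604800",
            "tcp-fin": "300",
            "tcp-generic": "3600",
            "tcp-rst": "120",
            "udp": "300",
        },
        "version": "9",
    },
    "syslog-facility": "daemon",
}


def to_set_commands(tree, path=("system", "flow-accounting")):
    """Yield one `set ...` line per leaf, walking the tree depth-first."""
    for key, value in tree.items():
        if isinstance(value, dict):
            # Nested node (e.g. `netflow`, or a server IP used as a key).
            yield from to_set_commands(value, path + (key,))
        elif isinstance(value, list):
            # Multi-valued leaf: one command per value.
            for item in value:
                yield "set " + " ".join(path + (key, item))
        else:
            yield "set " + " ".join(path + (key, value))


if __name__ == "__main__":
    for line in to_set_commands(FLOW_ACCOUNTING):
        print(line)
```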

UniFi controller

To have the UniFi controller deploy this configuration automatically, and thus make it permanent, create a config.gateway.json file: the controller will push it on every provisioning. Place it in the directory of the site ID that matches your deployment.

```
root@controller:/var/lib/unifi/sites/mysiteID# cat config.gateway.json
{
    "system": {
        "flow-accounting": {
            "ingress-capture": "pre-dnat",
            "interface": [
                "eth0",
                "eth1"
            ],
            "netflow": {
                "sampling-rate": "10",
                "server": {
                    "192.168.1.100": {
                        "port": "6999"
                    }
                },
                "timeout": {
                    "expiry-interval": "60",
                    "flow-generic": "60",
                    "icmp": "300",
                    "max-active-life": "604800",
                    "tcp-fin": "300",
                    "tcp-generic": "3600",
                    "tcp-rst": "120",
                    "udp": "300"
                },
                "version": "9"
            },
            "syslog-facility": "daemon"
        }
    }
}
```
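Since the controller pushes this file on every provisioning, a stray comma is easy to miss. Here is a minimal sketch that checks the file parses as JSON and that the keys used above are present before triggering a provisioning; the default path and the list of expected keys are assumptions taken from this example.

```python
import json
import sys

# Path from the example above; "mysiteID" must be replaced by your own site ID.
DEFAULT_PATH = "/var/lib/unifi/sites/mysiteID/config.gateway.json"

# Keys this post relies on (assumption: extend as needed).
REQUIRED_NETFLOW_KEYS = ("sampling-rate", "server", "version")


def check_gateway_config(text):
    """Return a list of problems found in config.gateway.json content."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top-level value is not a JSON object"]

    flow = config.get("system", {}).get("flow-accounting")
    if not isinstance(flow, dict):
        return ["missing system.flow-accounting section"]

    problems = []
    netflow = flow.get("netflow", {})
    for key in REQUIRED_NETFLOW_KEYS:
        if key not in netflow:
            problems.append(f"missing system.flow-accounting.netflow.{key}")
    if not flow.get("interface"):
        problems.append("no interface listed under flow-accounting")
    return problems


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_PATH
    with open(path, encoding="utf-8") as handle:
        issues = check_gateway_config(handle.read())
    for issue in issues:
        print(issue)
    sys.exit(1 if issues else 0)
```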

With this configuration, the server at 192.168.1.100 receives on UDP port 6999 a stream carrying your NetFlow export, sampled at 1 packet in 10: only 1 out of every 10 packets going through the gateway is accounted for in the export. Tune this value carefully: sampling too many packets can saturate the small CPU of UniFi gateways, while sampling too few makes correlation difficult.
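Before setting up a collector, it can help to confirm that the export actually reaches the server. Here is a minimal sketch that listens on UDP and decodes only the fixed 20-byte NetFlow v9 packet header defined in RFC 3954 (version, record count, sysUptime, UNIX seconds, sequence number, source ID); it does not decode templates or flow records. Stop nfacctd while running it, since both want port 6999.

```python
import socket
import struct

# NetFlow v9 packet header (RFC 3954), network byte order:
# version, count, sysUptime (ms), UNIX seconds, sequence number, source ID.
V9_HEADER = struct.Struct("!HHIIII")


def parse_v9_header(datagram):
    """Decode the fixed 20-byte header at the start of a NetFlow v9 datagram."""
    if len(datagram) < V9_HEADER.size:
        raise ValueError("datagram shorter than a NetFlow v9 header")
    version, count, uptime_ms, unix_secs, sequence, source_id = V9_HEADER.unpack_from(datagram)
    return {
        "version": version,
        "count": count,  # number of records (template + data) in this packet
        "sys_uptime_ms": uptime_ms,
        "unix_secs": unix_secs,
        "sequence": sequence,
        "source_id": source_id,
    }


def listen(host="0.0.0.0", port=6999, max_packets=5):
    """Print the sender and decoded header of the first few datagrams received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(max_packets):
            datagram, (sender, _sender_port) = sock.recvfrom(65535)
            print(sender, parse_v9_header(datagram))


if __name__ == "__main__":
    listen()
```

A `version` of 9 and a steadily increasing `sequence` are a good sign that the gateway configuration took effect.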

Data collection

I have always been a fan of the pmacct project :) So, naturally, I deployed an nfacctd daemon on my personal server to collect the NetFlow exports and aggregate them using a few primitives.

```
user@home ~ cat /opt/pmacct/etc/nfacctd.conf
!
! nfacctd configuration for home
!
!
! nfacctd daemon parameters
! for details : http://wiki.pmacct.net/OfficialConfigKeys
!
daemonize: false
pidfile: /var/run/nfacctd.pid
logfile: /var/log/nfacctd.log
syslog: daemon
core_proc_name: nfacctd
!
!interface: enp0s25
nfacctd_ip: 192.168.1.100
nfacctd_port: 6999
!nfacctd_time_new: true
!nfacctd_disable_checks: true
!nfacctd_renormalize: true
nfacctd_ext_sampling_rate: 10
geoip_ipv4_file: /usr/share/GeoIP/GeoIP.dat
geoip_ipv6_file: /usr/share/GeoIP/GeoIPv6.dat
!aggregate[traffic]: in_iface,out_iface,proto,tcpflags,ethtype,src_host_country,dst_host_country,src_host,dst_host
aggregate[traffic]: in_iface,out_iface,proto,tcpflags,src_host_country,dst_host_country,src_host,dst_host
! storage methods
plugins: print[traffic]
! print rules
print_output_file[traffic]: /tmp/nfacctd/%s.json
print_output: json
print_history: 2m
print_history_roundoff: m
print_refresh_time: 60
plugin_pipe_size: 102400000
plugin_buffer_size: 102400
```
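With `sampling-rate 10`, only about 1 packet in 10 ends up in the export. Since `nfacctd_renormalize` is commented out in this configuration, I assume the `packets` and `bytes` counters written by nfacctd are the raw sampled values. A rough estimate of the real traffic is then the counter multiplied by the sampling rate; here is a minimal sketch of that scaling (the function name is mine).

```python
SAMPLING_RATE = 10  # matches `sampling-rate 10` and `nfacctd_ext_sampling_rate: 10`


def renormalize(record, rate=SAMPLING_RATE):
    """Return a copy of an nfacctd record with estimated (unsampled) counters.

    Assumes the record's counters are raw sampled values: with 1-in-N
    sampling, each sampled packet stands for roughly N real packets.
    """
    estimated = dict(record)
    estimated["packets"] = record["packets"] * rate
    estimated["bytes"] = record["bytes"] * rate
    return estimated


if __name__ == "__main__":
    sample = {"ip_src": "207.180.192.205", "packets": 1, "bytes": 125}
    print(renormalize(sample))  # {'ip_src': '207.180.192.205', 'packets': 10, 'bytes': 1250}
```

This is only a statistical estimate: for tiny flows such as the single-packet records in the JSON example, the error is large, and it shrinks as flows get bigger.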

Once pmacct is installed and the daemon is configured in nfacctd.conf, you should be able to view / retrieve the NetFlow exports, either through an in-memory pipe or through a JSON export.
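With the print plugin configured above, nfacctd writes its output files under /tmp/nfacctd/. Here is a minimal sketch that reads them back; it relies on `json.JSONDecoder.raw_decode` so that it works whether the records are one per line or pretty-printed (I did not want to assume a layout), and the glob pattern simply mirrors `print_output_file[traffic]`.

```python
import glob
import json

OUTPUT_GLOB = "/tmp/nfacctd/*.json"  # mirrors print_output_file[traffic]


def iter_json_objects(text):
    """Yield every JSON value in `text`, whatever whitespace separates them."""
    decoder = json.JSONDecoder()
    idx, end = 0, len(text)
    while True:
        # raw_decode() rejects leading whitespace, so skip it first.
        while idx < end and text[idx].isspace():
            idx += 1
        if idx >= end:
            return
        obj, idx = decoder.raw_decode(text, idx)
        yield obj


def load_records(pattern=OUTPUT_GLOB):
    """Read all nfacctd JSON files matching `pattern`, in file-name order."""
    records = []
    for path in sorted(glob.glob(pattern)):
        with open(path, encoding="utf-8") as handle:
            records.extend(iter_json_objects(handle.read()))
    return records


if __name__ == "__main__":
    for record in load_records():
        print(record["ip_src"], "->", record["ip_dst"], record["bytes"])
```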

Example of JSON generated by nfacctd:

```
{
    "event_type": "purge",
    "iface_in": 2,
    "iface_out": 3,
    "ip_src": "207.180.192.205",
    "ip_dst": "192.168.1.101",
    "country_ip_src": "DE",
    "country_ip_dst": "",
    "tcp_flags": "0",
    "ip_proto": "udp",
    "stamp_inserted": "2020-08-20 11:28:00",
    "stamp_updated": "2020-08-20 11:29:01",
    "packets": 1,
    "bytes": 125
}
{
    "event_type": "purge",
    "iface_in": 3,
    "iface_out": 2,
    "ip_src": "192.168.1.101",
    "ip_dst": "5.187.71.162",
    "country_ip_src": "",
    "country_ip_dst": "RU",
    "tcp_flags": "0",
    "ip_proto": "udp",
    "stamp_inserted": "2020-08-20 11:28:00",
    "stamp_updated": "2020-08-20 11:29:01",
    "packets": 1,
    "bytes": 311
}
```

Data export

Once the data is received, you can process what nfacctd produces and insert it into any TSDB.
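As an example of such processing, before any TSDB is involved, here is a minimal sketch that ranks remote countries by bytes from records shaped like the JSON above. Treating the non-empty country as the "remote" side is my own simplification, based on the local 192.168.1.x addresses having an empty country in the sample.

```python
from collections import Counter


def remote_country(record):
    """Pick the country of whichever side is not local (empty country = local)."""
    return record.get("country_ip_src") or record.get("country_ip_dst") or "??"


def top_countries(records, n=10):
    """Sum bytes per remote country and return the n largest as (country, bytes)."""
    totals = Counter()
    for record in records:
        totals[remote_country(record)] += record.get("bytes", 0)
    return totals.most_common(n)


if __name__ == "__main__":
    records = [
        {"country_ip_src": "DE", "country_ip_dst": "", "bytes": 125},
        {"country_ip_src": "", "country_ip_dst": "RU", "bytes": 311},
    ]
    print(top_countries(records))  # [('RU', 311), ('DE', 125)]
```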

Python - JSON to InfluxDB

I'm not sure InfluxDB is the best technical choice for storing NetFlow data, because of the high cardinality of the tags. For home use, however, it is more than enough :)
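In InfluxDB, each distinct combination of tag values within a measurement is a separate series, so cardinality grows with every new host seen. Here is a minimal sketch that measures it on a batch of nfacctd records; the tag set below is a hypothetical choice, not necessarily what my script uses.

```python
# Hypothetical tag set: the record fields that would become InfluxDB tags.
TAG_KEYS = ("ip_src", "ip_dst", "ip_proto", "country_ip_src", "country_ip_dst")


def series_cardinality(records, tag_keys=TAG_KEYS):
    """Count distinct tag-value combinations, i.e. distinct series in one measurement."""
    return len({tuple(record.get(key, "") for key in tag_keys) for record in records})


def upper_bound(records, tag_keys=TAG_KEYS):
    """Worst case: product of distinct values per tag, if every combination occurred."""
    bound = 1
    for key in tag_keys:
        bound *= len({record.get(key, "") for record in records})
    return bound
```

On the two sample records above, `series_cardinality` gives 2 while `upper_bound` is already 16: each new remote IP or country multiplies the number of possible series, which is exactly what hurts InfluxDB at scale.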

So I wrote a quick Python script that parses and enriches this data (for example by replacing the interfaces' SNMP indexes with their names), then pushes it into InfluxDB.

I'll probably share this script on GitHub once it's a bit cleaner!
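Until then, here is a minimal sketch of the same idea, not the script itself: map the SNMP ifIndex values to interface names and format each record as an InfluxDB line protocol point. The ifIndex-to-name mapping, the measurement name `netflow`, the tag/field split and the assumption that `stamp_inserted` is in UTC are all hypothetical. Escaping follows the line protocol rules for tag keys and values (backslash before commas, equals signs and spaces), and empty tag values are dropped because line protocol does not accept them.

```python
from datetime import datetime, timezone

# Hypothetical mapping of SNMP ifIndex -> interface name on the gateway.
IFINDEX_NAMES = {2: "eth0", 3: "eth1"}
MEASUREMENT = "netflow"  # hypothetical measurement name (no characters to escape)


def escape_tag(value):
    """Escape a tag key or value for line protocol: commas, equals signs, spaces."""
    return str(value).replace(",", "\\,").replace("=", "\\=").replace(" ", "\\ ")


def to_line(record):
    """Format one nfacctd JSON record as an InfluxDB line protocol point."""
    tags = {
        # Enrichment: replace SNMP indexes with names, keep the index if unknown.
        "iface_in": IFINDEX_NAMES.get(record["iface_in"], str(record["iface_in"])),
        "iface_out": IFINDEX_NAMES.get(record["iface_out"], str(record["iface_out"])),
        "ip_src": record["ip_src"],
        "ip_dst": record["ip_dst"],
        "ip_proto": record["ip_proto"],
        "country_ip_src": record.get("country_ip_src", ""),
        "country_ip_dst": record.get("country_ip_dst", ""),
    }
    # Empty tag values are not allowed, so drop them; sort keys for consistency.
    tag_set = ",".join(
        f"{escape_tag(k)}={escape_tag(v)}" for k, v in sorted(tags.items()) if v != ""
    )
    # Integer fields take an `i` suffix.
    field_set = f"packets={int(record['packets'])}i,bytes={int(record['bytes'])}i"
    # Assumption: stamp_inserted is UTC; the timestamp is in nanoseconds.
    stamp = datetime.strptime(record["stamp_inserted"], "%Y-%m-%d %H:%M:%S")
    ns = int(stamp.replace(tzinfo=timezone.utc).timestamp()) * 1_000_000_000
    return f"{MEASUREMENT},{tag_set} {field_set} {ns}"
```

The resulting lines can then be sent in batches to InfluxDB, for example with a POST to the 1.x HTTP `/write` endpoint or through an official client library.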

Grafana

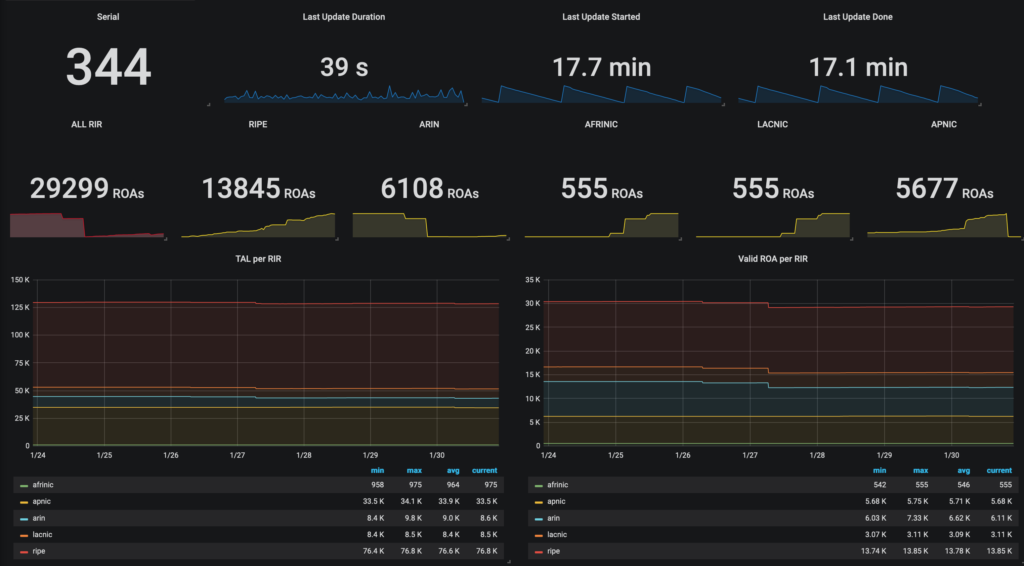

Here is a small example of what you can end up building with Grafana and a few InfluxDB queries: top countries, top traffic by IP, and so on.